We're living through the strangest linguistic moment. Everyone's terrified of sounding like AI, going through their own writing for telltale signs of ChatGPT fingerprints. The excessive em dashes—like this one. The "not this, but that" framing. The dreaded "here's the thing" opener that makes people cringe and immediately suspicious.

We have always used these constructions. We've always reached for emphasis, contrast, and conversational bridges. The only difference now is that we've become hyperaware of them, dissecting every LinkedIn post (and rolling our eyes at most of them).

Children's book author Divya Anand captured this perfectly when she lamented how ChatGPT has essentially "ruined" em dashes for writers—a punctuation mark that's crucial for authors but now feels tainted by AI association.

Overcorrection Wars

In response to this AI anxiety, I’m seeing a fascinating overcorrection unfold. People are deliberately making their writing more "human" by doing exactly what they think AI wouldn't do: abandoning proper capitalization, letting typos slide, making everything sound aggressively casual and personal. It's linguistic performance art. We're all method actors playing the role of "definitely not a robot."

And soon, this performative humanity will become the new normal. What started as deliberate imperfection is evolving into a genuine communication style. Today's intentional typo is tomorrow's standard grammar.

The Green Owl Effect

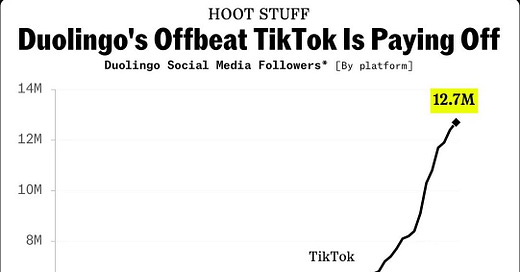

Remember when Duolingo went unhinged on social media (and it worked out for them)? Suddenly every brand discovered they could be sassy, threatening, and weirdly personal, with returns in tow.

We're seeing the same pattern with "human-style" writing: what begins as a distinctive approach quickly becomes industry standard. Companies will conduct "authenticity audits" of their content, which means we're systematizing spontaneity, creating templates for being untemplate-like. Marketing budgets dropped to 7.7% of revenue last year, and I suspect part of the reason is that nobody can agree on what authentic even means anymore.

When AI Actually Helps

For those of us who grew up in multilingual households but operate primarily in English, ChatGPT isn't the enemy; it's a lifeline. It helps bridge the gap when you know exactly what you want to say but can't quite find the English phrasing that captures your multilingual thoughts. It's like having a patient language exchange partner who never judges your grammar experiments.

The tool becomes less about replacing human creativity and more about unlocking it. It's scaffolding for complex ideas, a way to translate internal logic into external clarity. For many writers, especially those navigating language as immigrants or global citizens, AI offers freedom rather than replacement.

There is a big distinction to make: outsourcing writing is fine, but outsourcing thinking is dangerous territory. AI can help you find the right words for your ideas, polish your phrasing, or structure your arguments, but the ideas themselves need to stay yours. I'm all for AI making our writing clearer and more effective, but when we start letting it generate our actual thoughts and perspectives? Everything starts to look the same (and therefore becomes completely forgettable).

What's Next: The Psychology of Trust

The trust landscape is shifting in fascinating ways. Here are the psychological trends I'm watching:

The Bot Detector Blues: Audiences are developing pattern recognition for artificial communication. They spot the too-perfect grammar, the slightly off emotional beats, and the formulaic structures, and they may doubt your writing even if it's 100% human. This creates a new cognitive load where people are constantly scanning for authenticity markers.

The Humanity Olympics: As AI gets better at mimicking human quirks, humans develop new behavioral signals to prove their authenticity. Intentional typos and casual grammar become psychological peacocking for the digital age.

The Honesty Hack: People are more tolerant of AI use when it's explicitly stated, but less forgiving when they discover hidden usage. The cover-up feels worse than the original act—classic deception psychology.

Fake Fatigue Relief: Brands that openly embrace AI tools actually reduce audience stress. When everything feels potentially fake, honest disclosure provides psychological relief.

The Uncanny Valley Revenge: We're entering a phase where "too good" writing triggers suspicion. Polished prose now carries the burden of proof—is this human excellence or algorithmic perfection? The irony is delicious: we're punishing good writing because it might be artificial.

What This Means for Your Content

These psychological shifts have real implications for how we create and consume content:

Email campaigns will need to balance polish with personality: too perfect feels robotic, too casual feels unprofessional. What’s the sweet spot?

Research reports face the uncanny valley problem: comprehensive data and flawless formatting now trigger AI suspicion. Adding human commentary and acknowledging limitations becomes crucial for credibility.

Advertising campaigns experiment with transparency disclaimers ("AI helped us write this") and find that audiences respond better than expected. Honesty about tools becomes a competitive advantage.

Blog posts (like this one) benefit from personal touches: family references, cultural context, individual quirks that serve as authenticity anchors in an increasingly artificial landscape.

The most beautifully human thing about this entire mess? We're all improvising together, making it up as we go, trying to stay human in the process.

Until next time,

Shrikala